Welcome to Doom9's Forum, THE in-place to be for everyone interested in DVD conversion. Before you start posting please read the forum rules. By posting to this forum you agree to abide by the rules. |


#21 | Link | |

|

Registered User

Join Date: Mar 2018

Posts: 447

|

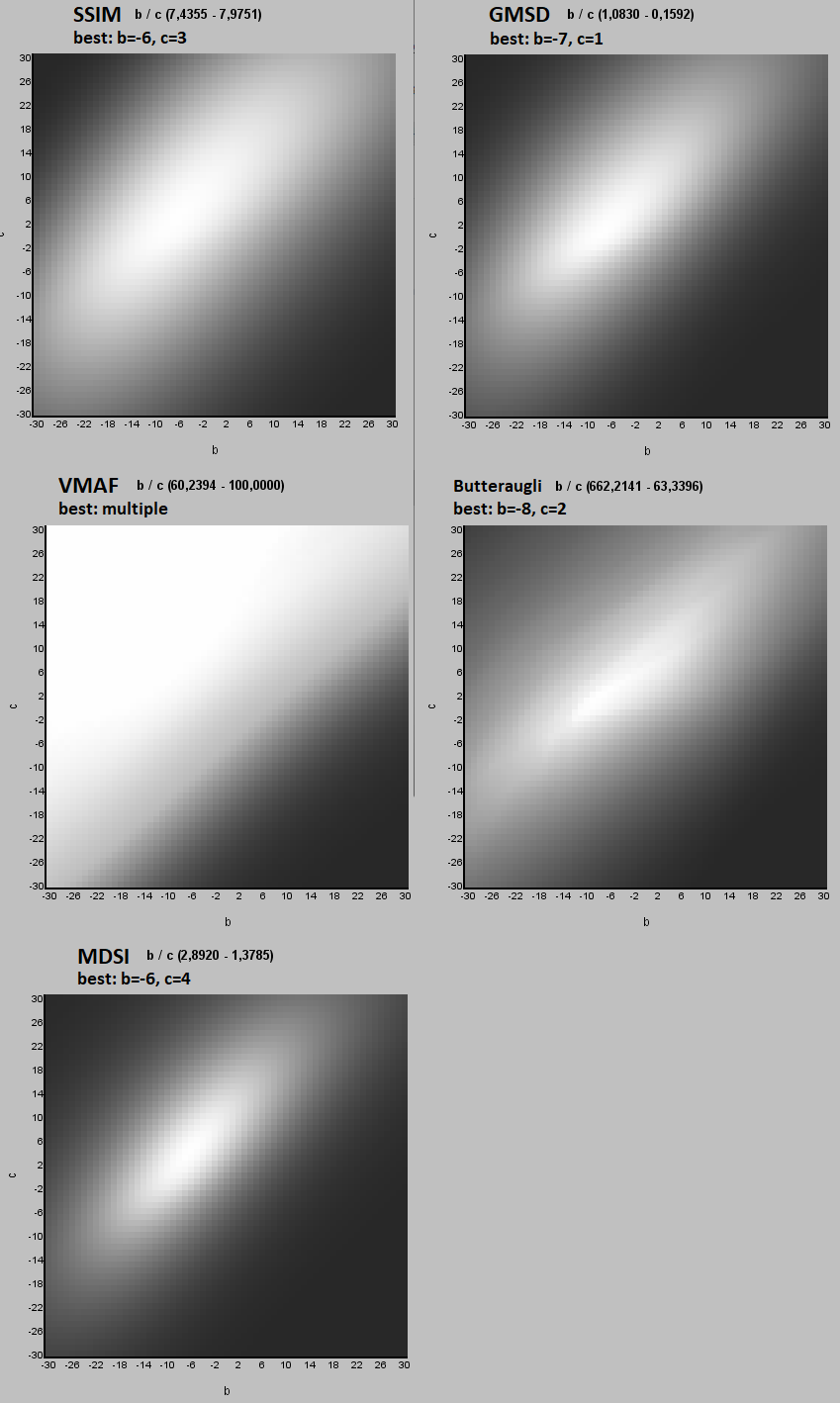

Quote:

I ran some exhaustive tests with your clip. I set the divider to 10.0 instead of 100.0 to make it faster. The results were interesting:

SSIM and GMSD roughly agree on where the best settings are. SSIM's best was at b=-6 c=3. Note that this is equivalent to b=-60 c=30 with your original script, which is very close to your best result of b=-59 c=31. GMSD's best is at b=-7 c=1, a bit further away from your result of b=-77 c=5. GMSD is a bit more "focused"; it seems to see the differences better than SSIM.

VMAF, on the other hand, gives many "perfect" results, and when we look at only those, the center is very close to the top left coordinates b=-30 c=30. I think VMAF is trying to say that the differences are so small that they're not perceptible to the average human. Have you tried eyeballing the different settings? It is, however, a bit of a mystery why VMAF has the center of its best results in a different place than SSIM and GMSD.

Last edited by zorr; 8th February 2019 at 01:51.
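For anyone following along, the divider only sets the step size of the search grid. Here is a minimal sketch of why b=-6 c=3 with divider 10.0 is the same setting as b=-60 c=30 with divider 100.0. The helper names `to_param` and `grid_size` are made up for illustration and are not part of Zopti, and the span -3.0..3.0 is only an assumed example range.

```python
def to_param(n, divider):
    """Map an integer grid index to the actual filter parameter value."""
    return n / divider

# the same point expressed on the coarse (10.0) and fine (100.0) grids
coarse = (to_param(-6, 10.0), to_param(3, 10.0))
fine = (to_param(-60, 100.0), to_param(30, 100.0))
print(coarse == fine)

def grid_size(lo, hi, divider):
    """Number of (b, c) combinations when both span lo..hi in steps of 1/divider."""
    per_axis = int(round((hi - lo) * divider)) + 1
    return per_axis ** 2

# assumed example span: a 10x coarser step means roughly 100x fewer combinations
print(grid_size(-3.0, 3.0, 10.0), grid_size(-3.0, 3.0, 100.0))
```

That is the reason the coarser divider makes an exhaustive run so much faster: the number of combinations on a two-parameter grid shrinks with the square of the step size.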


#22 | Link |

|

Pig on the wing

Join Date: Mar 2002

Location: Finland

Posts: 5,733

|

To be honest, without zooming in, it's not easy to see the differences of the optimal parameters provided by the various metrics. My guess is that VMAF is not the best method for these single frame comparisons but has much better value with moving video. GMSD looks quite promising with these scaling comparisons.

__________________

And if the band you're in starts playing different tunes I'll see you on the dark side of the Moon... |


#23 | Link | |

|

Registered User

Join Date: Dec 2005

Location: Germany

Posts: 1,795

|

muvsfunc added a new similarity metric: MDSI(Mean Deviation Similarity Index) https://github.com/WolframRhodium/mu...0cf0cb6b1db304

Quote:

It detects even the smallest change very reliably.

__________________

AVSRepoGUI // VSRepoGUI - Package Manager for AviSynth // VapourSynth VapourSynth Portable FATPACK || VapourSynth Database Last edited by ChaosKing; 8th February 2019 at 16:53. |


#24 | Link |

|

Registered User

Join Date: Dec 2005

Location: Germany

Posts: 1,795

|

I've added butteraugli and mdsi to zoptilib https://pastebin.com/511BcmNp

__________________

AVSRepoGUI // VSRepoGUI - Package Manager for AviSynth // VapourSynth VapourSynth Portable FATPACK || VapourSynth Database |


#26 | Link | ||

|

Registered User

Join Date: Mar 2018

Posts: 447

|

Quote:

MDSI seems promising based on the authors' paper. It requires clips to be RGB. Boulder, is your source material in the BT.709 colorspace? I'm not sure if Zopti should do the conversion automatically or just require RGB. MDSI by default downscales the clips by 2, which is kinda counterproductive when trying to measure the effects of scaling. I will add a method to set the downscaling value. The current MDSI implementation in muvsfunc returns "inf" for one of the frames and the script doesn't finish. Perhaps it's not ready for prime time (since it's not even released yet).

Butteraugli can indeed measure small differences, that's its main purpose. So it may not be accurate with large differences but should work well for Boulder's use case. It's also slow. I mean slooooow... one iteration (8 frames) takes 22 seconds on my machine.

Butteraugli also needs RGB and it needs to be gamma corrected... if I'm interpreting it correctly, clips should be in linear RGB space. How does one do that conversion with VapourSynth?
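To put the Butteraugli speed in perspective, here is a rough back-of-the-envelope estimate of an exhaustive run. It assumes b and c are each searched over -30..30 (61 values per axis) and that every iteration takes the quoted 22 seconds. Both are assumptions for illustration, not measurements.

```python
SECONDS_PER_ITERATION = 22               # figure quoted in the post (8 frames per iteration)
VALUES_PER_AXIS = len(range(-30, 31))    # assumed search range -30..30 for both b and c

# an exhaustive search evaluates every (b, c) combination once
iterations = VALUES_PER_AXIS ** 2
total_hours = iterations * SECONDS_PER_ITERATION / 3600

print(f"{iterations} iterations, about {total_hours:.1f} hours")
```

Under those assumptions a full grid lands in the range of most of a day, so for a metric this slow it probably makes sense to narrow the range or use a coarser step first.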

Last edited by zorr; 9th February 2019 at 00:12. Reason: Corrected Buteraugli speed |


#28 | Link | |

|

Registered User

Join Date: Mar 2018

Posts: 447

|

Quote:

Code:

    import vapoursynth as vs
    import resamplehq as rhq
    from zoptilib import Zopti
    core = vs.core

    orig = core.ffms2.Source(source=r'blacksails.avi')

    # convert to RGB
    orig = core.fmtc.resample(clip=orig, css="444")
    orig = core.fmtc.matrix(clip=orig, mat="709", col_fam=vs.RGB)
    orig = core.fmtc.bitdepth(clip=orig, bits=8)

    zopti = Zopti(r'result.txt', metrics=['mdsi', 'time']) # initialize output file and chosen metrics

    b = -30/10.0 # optimize b = _n_/10.0 | -30..30 | b
    c = -30/10.0 # optimize c = _n_/10.0 | -30..30 | c
    alternate = rhq.resample_hq(orig, width=1280, height=720, kernel='bicubic', filter_param_a=b, filter_param_b=c)
    alternate = core.resize.Bicubic(alternate, width=3840, height=2160, filter_param_a=0, filter_param_b=0.5)
    orig = core.resize.Bicubic(orig, width=3840, height=2160, filter_param_a=0, filter_param_b=0.5)
    zopti.run(orig, alternate)

You need the zoptilib version ChaosKing modded, here.

Oh and thanks for the muvsfunc library, the similarity metrics are perhaps the most important ingredient in making an optimizer like Zopti work!
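The `# optimize` annotations in the script above describe an integer range per parameter that is then divided by 10.0. As a rough illustration of what an exhaustive search over such a grid amounts to, here is a self-contained sketch. It is not how Zopti is implemented; `toy_score` is a hypothetical stand-in for a real similarity metric, with its peak placed at b=-0.6, c=0.3 purely for demonstration.

```python
import itertools

def toy_score(b, c):
    """Hypothetical stand-in for a similarity metric (higher is better)."""
    return -((b + 0.6) ** 2 + (c - 0.3) ** 2)

def exhaustive_search(score, lo=-30, hi=30, divider=10.0):
    """Try every integer pair (nb, nc) in lo..hi and keep the best-scoring one."""
    best = None
    for nb, nc in itertools.product(range(lo, hi + 1), repeat=2):
        b, c = nb / divider, nc / divider
        s = score(b, c)
        if best is None or s > best[0]:
            best = (s, nb, nc)
    return best

best_score, best_nb, best_nc = exhaustive_search(toy_score)
print(best_nb, best_nc)
```

In the real script the score would come from the metric computed in `zopti.run(orig, alternate)`, and some metrics are "lower is better", so the comparison direction would have to be flipped for those.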


#29 | Link | |

|

Registered User

Join Date: Jan 2016

Posts: 162

|

Quote:

Thank you for your support and recognition. Your work on Zopti is impressive. |


#30 | Link | |

|

Registered User

Join Date: Mar 2018

Posts: 447

|

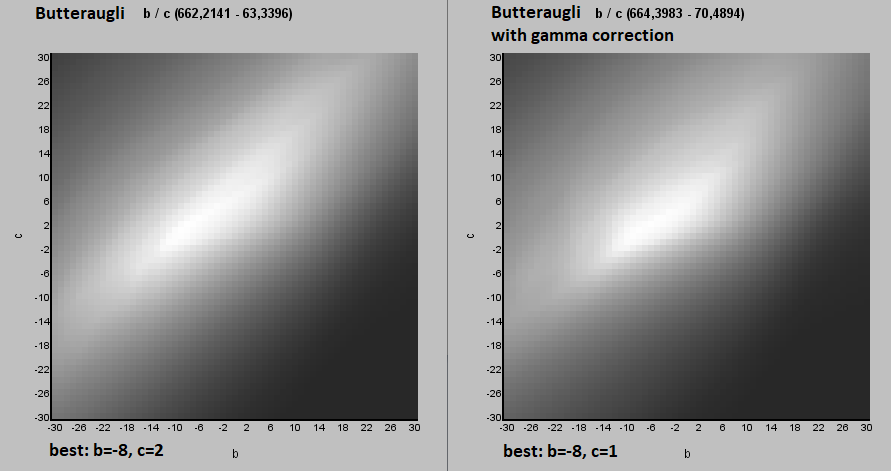

Quote:

Butteraugli is not as smooth a function as the others, especially near the best value. It's more focused than SSIM and slightly more focused than GMSD near the best value. The best value is at b=-8, c=2, which again agrees quite nicely with SSIM and GMSD. It took almost 24 hours to create that picture, hopefully I don't have to do that again... but of course I have to, because this was calculated without gamma correction. But I think I now know how to do it:

Code:

    # convert to linear RGB
    orig = core.fmtc.resample(clip=orig, css="444")
    orig = core.fmtc.matrix(clip=orig, mat="709", col_fam=vs.RGB)
    orig = core.fmtc.transfer(orig, transs="709", transd='linear') # to linear RGB
    orig = core.fmtc.bitdepth(clip=orig, bits=8)

All except VMAF are giving best b within [-6 .. -8] and best c within [1 .. 4]. So for this use case it probably doesn't matter which one of those is chosen. More tests are needed to determine which one works best for other use cases.

Thanks. Are you planning on implementing more similarity metrics? Of course quality matters more than quantity, but it's always possible to optimize for more than one similarity metric and there might be some useful combinations.
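For readers wondering what "linear RGB" means numerically: the `fmtc.transfer` call in the post converts a whole clip, and the per-sample math behind a BT.709-to-linear conversion can be sketched in plain Python as below. This uses the standard BT.709 transfer curve on normalized values in [0, 1]. The function names are made up for this illustration and have nothing to do with fmtconv's internals.

```python
def linear_to_bt709(l):
    """BT.709 OETF: linear light (0..1) to gamma-encoded signal (0..1)."""
    if l < 0.018:
        return 4.5 * l
    return 1.099 * l ** 0.45 - 0.099

def bt709_to_linear(v):
    """Inverse of the BT.709 OETF: gamma-encoded signal (0..1) to linear light."""
    if v < 0.081:
        return v / 4.5
    return ((v + 0.099) / 1.099) ** (1 / 0.45)

# a mid-level encoded value corresponds to a much darker linear value
mid_linear = bt709_to_linear(0.5)
print(round(mid_linear, 3))

# converting to linear and back should reproduce the input
roundtrip = linear_to_bt709(bt709_to_linear(0.5))
print(abs(roundtrip - 0.5) < 1e-9)
```

This also hints at why the metric surface can shift slightly after gamma correction: equal steps in encoded values are not equal steps in linear light.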


#31 | Link | |

|

Registered User

Join Date: Jan 2016

Posts: 162

|

Quote:

Anyway, you can set 'downsample=False' in SSIM to skip downsampling. I think those full-reference metrics are highly correlated. |


#32 | Link | ||

|

Registered User

Join Date: Mar 2018

Posts: 447

|

Quote:

What's your opinion on WaDIQaM / DIQaM? The paper is here and someone did a PyTorch implementation of WaDIQaM. It can also work as a full-reference metric.

Quote:

Agreed, at least in this particular case. I compared SSIM and GMSD with denoising and there SSIM had more ringing artifacts.


#33 | Link | |

|

Registered User

Join Date: Jan 2016

Posts: 162

|

Quote:

Porting it to VapourSynth is not hard. I will check it later. |


#34 | Link | |

|

Registered User

Join Date: Mar 2018

Posts: 447

|

Quote:

Thanks, it will be interesting even if it doesn't perform well in real-world tests. |


#35 | Link | |

|

Registered User

Join Date: Mar 2018

Posts: 447

|

Quote:

There are some changes, nothing radical but the gamma corrected version is slightly smoother. Best value has moved from c=2 to c=1. |


#36 | Link |

|

Registered User

Join Date: Jul 2016

Posts: 39

|

From what I can tell, this plugin is still being actively developed. Is it in a finished enough state to be usable? And if so, is there any documentation on how to use it?

My goal would be to use it to find settings for MVtools to interpolate frames for anime at the highest possible quality. Motion interpolation normally fails with animated content, and so I would like to see how well it works in a best case scenario with optimized settings. I'm working on trying to restore a field blended show, and if this works well enough, it would be very helpful for me. |


#37 | Link |

|

Registered User

Join Date: Mar 2018

Posts: 447

|

Zopti version 1.0.1-beta released, see the first post.

Here's an example of how to use the new features:
- no #output line
- using the MDSI metric with (semi)automatic RGB conversion

Code:

    # read input video
    video = core.ffms2.Source(source=r'd:\process2\1 deinterlaced.avi')

    # initialize metrics, specify YUV color matrix for RGB conversion (MDSI and Butteraugli need RGB)
    zopti = Zopti(r'results.txt', metrics=['mdsi', 'time'], matrix='601')

    # ... process the video ...

    # measure similarity of original and alternate videos, save results to output file
    zopti.run(orig, alternate)

Last edited by zorr; 19th February 2019 at 00:16. Reason: Added code example
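A note on why the `matrix` argument matters: the YUV-to-RGB conversion depends on which luma coefficients the source was encoded with, so picking the wrong matrix shifts colors before the metric ever sees them. Here is a small self-contained sketch of the per-pixel math on normalized values, using the standard BT.601 and BT.709 coefficients. The function and table names are hypothetical and this is not how zoptilib performs the conversion.

```python
# (Kr, Kb) luma coefficients for the two common matrices
COEFFS = {"601": (0.299, 0.114), "709": (0.2126, 0.0722)}

def ycbcr_to_rgb(y, cb, cr, matrix):
    """Convert normalized Y'CbCr (y in 0..1, cb/cr in -0.5..0.5) to R'G'B'."""
    kr, kb = COEFFS[matrix]
    kg = 1.0 - kr - kb
    r = y + 2.0 * (1.0 - kr) * cr
    b = y + 2.0 * (1.0 - kb) * cb
    g = (y - kr * r - kb * b) / kg
    return r, g, b

# a neutral gray converts identically with either matrix...
gray_601 = ycbcr_to_rgb(0.5, 0.0, 0.0, "601")
gray_709 = ycbcr_to_rgb(0.5, 0.0, 0.0, "709")

# ...but a colored pixel does not
red_601 = ycbcr_to_rgb(0.5, 0.0, 0.2, "601")
red_709 = ycbcr_to_rgb(0.5, 0.0, 0.2, "709")
print(red_601[0], red_709[0])
```

Since both clips go through the same conversion, a wrong matrix will not necessarily ruin a comparison, but setting it to match the source keeps the RGB values the metric sees closer to what is actually displayed.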


#38 | Link | ||

|

Registered User

Join Date: Mar 2018

Posts: 447

|

Quote:

- Augmented Script (part 1/2)
- Augmented Script (part 2/2)
- Hands-on tutorial (part 1/2)
- Hands-on tutorial (part 2/2)
- Optimizer arguments

The optimizer is fully functional, the remaining issues are mostly about how to use it most effectively. For example to figure out things like

Quote:

Code:

    import vapoursynth as vs
    from zoptilib import Zopti
    core = vs.core

    TEST_FRAMES = 10    # how many frames are tested
    MIDDLE_FRAME = 50   # middle frame number

    # read input video
    video = core.ffms2.Source(source=r'd:\process2\1 deinterlaced.avi')
    orig = video

    # initialize Zopti - starts measuring runtime
    zopti = Zopti(r'results.txt', metrics=['ssim', 'time'])

    # you could add preprocessing here to help MSuper - not used here
    searchClip = orig

    super_pel = 4 # optimize super_pel = _n_ | 2,4 | super_pel
    super_sharp = 2 # optimize super_sharp = _n_ | 0..2 | super_sharp
    super_rfilter = 2 # optimize super_rfilter = _n_ | 0..4 | super_rfilter
    super_search = core.mv.Super(searchClip, pel=super_pel, sharp=super_sharp, rfilter=super_rfilter)
    super_render = core.mv.Super(orig, pel=super_pel, sharp=super_sharp, rfilter=super_rfilter, levels=1)

    blockSize = 8 # optimize blockSize = _n_ | 4,8,16,32,64 ; min:divide 0 > 8 2 ? ; filter:overlap overlapv max 2 * x <= | blockSize
    searchAlgo = 5 # optimize searchAlgo = _n_ | 0..7 D | searchAlgo
    searchRange = 2 # optimize searchRange = _n_ | 1..10 | searchRange
    searchRangeFinest = 2 # optimize searchRangeFinest = _n_ | 1..10 | searchRangeFinest
    _lambda = 1000*(blockSize*blockSize)/(8*8) # optimize _lambda = _n_ | 0..20000 | lambda
    lsad=1200 # optimize lsad=_n_ | 8..20000 | LSAD
    pnew=0 # optimize pnew=_n_ | 0..256 | pnew
    plevel=1 # optimize plevel=_n_ | 0..2 | plevel
    overlap=0 # optimize overlap=_n_ | 0,2,4,6,8,10,12,14,16 ; max:blockSize 2 / ; filter:x divide 0 > 4 2 ? % 0 == | overlap
    overlapv=0 # optimize overlapv=_n_ | 0,2,4,6,8,10,12,14,16 ; max:blockSize 2 / | overlapv
    divide=0 # optimize divide=_n_ | 0..2 ; max:blockSize 8 >= 2 0 ? overlap 4 % 0 == 2 0 ? min | divide
    globalMotion = True # optimize globalMotion = _n_ | False,True | globalMotion
    badSAD = 10000 # optimize badSAD = _n_ | 4..10000 | badSAD
    badRange = 24 # optimize badRange = _n_ | 4..50 | badRange
    meander = True # optimize meander = _n_ | False,True | meander
    trymany = False # optimize trymany = _n_ | False,True | trymany

    # delta 2 because we interpolate a frame that matches original frame
    delta = 2
    useChroma = True
    bv = core.mv.Analyse(super_search, isb = True, blksize=blockSize, search=searchAlgo, searchparam=searchRange, pelsearch=searchRangeFinest, chroma=useChroma, \
        delta=delta, _lambda=_lambda, lsad=lsad, pnew=pnew, plevel=plevel, _global=globalMotion, overlap=overlap, overlapv=overlapv, divide=divide, badsad=badSAD, \
        badrange=badRange, meander=meander, trymany=trymany)
    fv = core.mv.Analyse(super_search, isb = False, blksize=blockSize, search=searchAlgo, searchparam=searchRange, pelsearch=searchRangeFinest, chroma=useChroma, \
        delta=delta, _lambda=_lambda, lsad=lsad, pnew=pnew, plevel=plevel, _global=globalMotion, overlap=overlap, overlapv=overlapv, divide=divide, badsad=badSAD, \
        badrange=badRange, meander=meander, trymany=trymany)

    # NOTE: we disable scene change detection by setting thSCD1 very high
    blockChangeThreshold = 10000
    maskScale = 70 # optimize maskScale = _n_ | 1..300 | maskScale
    inter = core.mv.FlowInter(orig, super_render, bv, fv, time=50, ml=maskScale, thscd1=blockChangeThreshold, thscd2=100, blend=False)

    # for comparison original must be forwarded one frame
    orig = orig[1:]

    # cut out the part used in quality / speed evaluation
    inter = inter[MIDDLE_FRAME - TEST_FRAMES//2 + (1 if (TEST_FRAMES%2==0) else 0) : MIDDLE_FRAME + TEST_FRAMES//2 + 1]
    orig = orig[MIDDLE_FRAME - TEST_FRAMES//2 + (1 if (TEST_FRAMES%2==0) else 0) : MIDDLE_FRAME + TEST_FRAMES//2 + 1]

    zopti.run(orig, inter)
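The slicing expression at the end of the script is a bit dense, so here is the same arithmetic pulled out into a small standalone sketch. A plain Python list stands in for the clip, and the helper name `evaluation_window` is made up for this example. It shows which frames end up being compared for even and odd values of TEST_FRAMES.

```python
def evaluation_window(middle_frame, test_frames):
    """Return (start, end) slice bounds covering test_frames frames around middle_frame."""
    # for an even count the window starts one frame later so the length stays exact
    start = middle_frame - test_frames // 2 + (1 if test_frames % 2 == 0 else 0)
    end = middle_frame + test_frames // 2 + 1
    return start, end

frames = list(range(200))  # stand-in for a 200-frame clip

start, end = evaluation_window(50, 10)
selected = frames[start:end]
print(start, end, len(selected))

# an odd count is centered exactly on the middle frame
odd_start, odd_end = evaluation_window(50, 9)
print(odd_start, odd_end, odd_end - odd_start)
```

Keeping the window small is what makes each iteration fast, so it is worth picking MIDDLE_FRAME from a part of the video with the kind of motion you actually want to optimize for.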


#39 | Link |

|

Registered User

Join Date: Mar 2018

Posts: 447

|

@ChaosKing, I stole your improvements to zoptilib, added some of my own and packaged it to the latest Zopti installation zip.

Perhaps we could agree on how to do the updates in the future. We could use your Github repository but I'd need to have write permissions there. Is that possible? |


#40 | Link |

|

Registered User

Join Date: Dec 2005

Location: Germany

Posts: 1,795

|

Yes it is possible. I can add you as a Collaborator.

You could also make a new repo and/or clone mine and just accept Pull requests.

__________________

AVSRepoGUI // VSRepoGUI - Package Manager for AviSynth // VapourSynth VapourSynth Portable FATPACK || VapourSynth Database |
