Zopti is what was called AvisynthOptimizer. The name was changed because it now supports VapourSynth as well. This first post is an introduction similar to this one on the Avisynth Development forum, just changed a bit to reflect the latest features and what is possible with VapourSynth. I also cut out some unnecessary blabbering.

Let's say you want to use motion compensation to double the frame rate or replace a few corrupted frames. The right tool for that job is obviously MVTools. The only problem now is finding good parameters to get the best quality (because every video needs slightly different settings). Ok no problem, I will just quickly adjust these... wait... there are about 60 parameters to adjust! Finding good settings would involve *a lot* of manual testing. This is where Zopti jumps in and says "Don't worry pal, I will find the best settings for you!".

But how? A VapourSynth script is first "augmented" by adding instructions on which parameters should be optimized. The instructions are written inside comments so the script will continue to work normally. The script also needs to measure the quality of the processed video using current parameter values or measure the running time (or preferably both the quality and runtime). The script needs to write these results to a specific file. There is a helper Python library "zoptilib" you can use to do the quality/runtime measurements and write the file.

Now you might be wondering how on earth the script can measure the quality. I can only think of one way: compare the processed frames to reference frames and measure the similarity. The more similar the frames are, the better the quality. VapourSynth has (at least) these similarity metrics available: SSIM (the classic, most well known), GMSD (seems better than SSIM) and VMAF (by Netflix). It's also possible to get MS-SSIM (multi-scale SSIM) from the VMAF plugin and I also implemented B-SSIM for AviSynth (SSIM with blurring adjustment).

Ok, but where do we get the reference frames? In the case of MVTools we can use the original frames as reference frames and the script will try to reconstruct them using motion compensation (but it's not allowed to use the reference frame itself in the reconstruction). We can do something similar if we want to use MVTools to double the framerate: we first create the double-rate video, then remove the original frames from it, then double the framerate again and finally compare the newly interpolated frames to the original frames. Or you could reconstruct every odd frame using the even frames. This idea is not limited to MVTools: you could for example do color grading using some other software and then try to recreate the same result using VapourSynth. I'm sure the smart people here will find use cases I couldn't even dream about.
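To see why the double / remove / double-again trick gives frames that can be compared to the originals, here is a small sketch that only tracks frame timestamps (no video processing involved). The function name `double_rate` and the timestamp units are made up for this illustration; a real script would do the interpolation with MVTools.

```python
def double_rate(times):
    """Insert a midpoint between each pair of neighbouring timestamps."""
    out = []
    for a, b in zip(times, times[1:]):
        out += [a, (a + b) / 2]
    out.append(times[-1])
    return out

# Timestamps of the original frames, in units of one original frame duration.
originals = [0.0, 1.0, 2.0, 3.0, 4.0]

# Step 1: double the frame rate (originals plus interpolated midpoints).
doubled = double_rate(originals)

# Step 2: drop the original frames, keeping only interpolated ones.
interpolated_only = [t for t in doubled if t not in originals]

# Step 3: double the frame rate again.
redoubled = double_rate(interpolated_only)

# Step 4: the frames created in step 3 sit at the original timestamps,
# so they can be compared to the original frames.
reconstructed = [t for t in redoubled if t not in interpolated_only]

print(interpolated_only)  # timestamps halfway between the originals
print(reconstructed)      # timestamps matching the inner original frames
```

Note that the first and last original frames have no reconstructed counterpart, so they would be left out of the comparison.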

MVTools is a complex plugin and the settings can change the runtime of the script dramatically. In addition to quality we can also measure the runtime to find settings that are fast enough (for example if you want to use the script in real-time).

So if the purpose is to find the best settings, what do we consider the "best" when both quality and time are involved? For example we can have settings A with quality 99 (larger is better), time 2300ms (larger is worse) and settings B with quality 95 and time 200ms. Which one of these is better? We can use the concept of pareto domination to answer this question. When one solution is at least as good as the other solution in every objective (in this case the objectives are quality and speed) and better in at least one objective, it dominates the other solution. In this example, neither dominates the other. But if we have settings C with quality 99 and time 200ms it dominates both A and B. In the end we want to know all the nondominated solutions, which is called the pareto front. So there's going to be a list of parameters with increasing quality and runtime. You can have more than two objectives if you want, the same pareto concept works. There's a good and free ebook called "Essentials of Metaheuristics" which describes the pareto concept and much more.
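The A/B/C example above can be written out as a short Python sketch. This is only an illustration of the dominance definition, not Zopti's actual code; the `Result` type and the function names are made up here.

```python
from typing import NamedTuple

class Result(NamedTuple):
    name: str
    quality: float  # larger is better
    time_ms: float  # smaller is better

def dominates(a, b):
    """True if a is at least as good as b in every objective
    and strictly better in at least one."""
    at_least_as_good = a.quality >= b.quality and a.time_ms <= b.time_ms
    strictly_better = a.quality > b.quality or a.time_ms < b.time_ms
    return at_least_as_good and strictly_better

def pareto_front(results):
    """Keep only the results that no other result dominates."""
    return [r for r in results if not any(dominates(o, r) for o in results)]

A = Result("A", quality=99, time_ms=2300)
B = Result("B", quality=95, time_ms=200)
C = Result("C", quality=99, time_ms=200)

print(dominates(A, B), dominates(B, A))        # neither dominates the other
print([r.name for r in pareto_front([A, B])])  # both are on the front
print([r.name for r in pareto_front([A, B, C])])  # C dominates A and B
```

With more objectives the same idea applies: compare every objective in its "better" direction and require at least one strict improvement.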

Zopti reads the augmented script, verifies it and starts running a metaheuristic optimization algorithm. There are currently four algorithm choices: NSGA-II, SPEA2, mutation and exhaustive. The first two are some of the best metaheuristic algorithms available and are also described in the ebook mentioned above. Mutation is my own simplistic algorithm (but it can be useful if you're in a hurry since it's the most efficient). Exhaustive will try all possible combinations, which is useful if you only have a small number of them (you could for example do an exhaustive search on one parameter only). All the metaheuristic algorithms work in a similar manner: they generate different solutions and test them. The optimizer does these tests by creating scripts with certain parameter values and running them. The script then writes the measurements (quality/time) into a file which the optimizer reads. The metaheuristic then decides which parameters to try next based on the results. This continues until some end criterion is met.
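The generate / run / read-results loop is easiest to picture for the exhaustive case. The sketch below is not how Zopti is implemented: the parameter names, the value lists and the `run_script` stand-in (which returns a made-up quality score instead of running a real script) are all assumptions for the sake of illustration.

```python
import itertools

# Hypothetical search space: parameter name -> candidate values.
search_space = {"blksize": [4, 8, 16], "pel": [1, 2, 4]}

def run_script(params):
    """Stand-in for running the video script and reading its result file.
    Returns a made-up quality score (larger is better)."""
    return 100 - abs(params["blksize"] - 8) - 2 * abs(params["pel"] - 2)

names = list(search_space)
log = []  # every tested combination together with its result

# Exhaustive search: try every combination of parameter values.
for values in itertools.product(*(search_space[n] for n in names)):
    params = dict(zip(names, values))
    log.append((params, run_script(params)))

best_params, best_quality = max(log, key=lambda entry: entry[1])
print(len(log), "combinations tested")
print("best:", best_params, best_quality)
```

The number of combinations is the product of the value counts, which is why exhaustive search only makes sense for small search spaces; the metaheuristic algorithms replace the `itertools.product` loop with a smarter choice of what to test next.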

There are three different ending criteria: number of iterations, time limit and "dynamic". Number of iterations is just that: the algorithm runs the script a specific number of times. Setting a time limit can be pretty useful if you know how much time you can use for the optimization. You could for example let it run overnight for 8 hours and see the results in the morning. The dynamic variation stops only when the optimization no longer makes any progress. Lack of progress is defined as "no new pareto front members in the last x iterations". This can be useful if you want to find the best possible results regardless of how long it takes.
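As a rough sketch of the dynamic criterion (again an illustration, not Zopti's code), the loop below keeps a pareto front of (quality, time) results and stops once `patience` consecutive results have failed to enter the front. The function names and the `patience` parameter are made up; `patience` plays the role of "x" above.

```python
def dominates(a, b):
    """a and b are (quality, time_ms) tuples: higher quality and
    lower time are better. Equal results do not dominate each other."""
    return a[0] >= b[0] and a[1] <= b[1] and a != b

def optimize_until_stalled(results, patience):
    """Consume results one by one; stop after `patience` consecutive
    results that did not become pareto front members."""
    front = []
    stalled = 0
    iterations = 0
    for r in results:
        iterations += 1
        if r in front or any(dominates(m, r) for m in front):
            stalled += 1  # no progress on this iteration
        else:
            # New front member: drop the members it dominates.
            front = [m for m in front if not dominates(r, m)] + [r]
            stalled = 0
        if stalled >= patience:
            break
    return front, iterations

# Made-up sequence of (quality, time_ms) measurements.
measurements = [(90, 500), (95, 400), (80, 600), (85, 450), (70, 700), (99, 100)]
front, iterations = optimize_until_stalled(measurements, patience=3)
print(front, iterations)
```

In this made-up sequence the run stops before the last (and best) result is ever tested, which shows the trade-off: a small x ends the run sooner but can miss later improvements.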

During the optimization all tested parameters and their results are written to a log file. This log file can be "evaluated" during and after the optimization process. The evaluation basically means finding the pareto front and showing it. You can also create scripts from the pareto front in order to test them yourself. It's also possible to visualize the results of the log file in a two-dimensional scatter chart. This chart highlights the pareto front and shows all the other results too. The chart can also be "autorefreshing": it loads the log file every few seconds and updates the visuals, which is a fun way to track how the optimization is progressing. Here's a gif of what it looks like (obviously sped up):

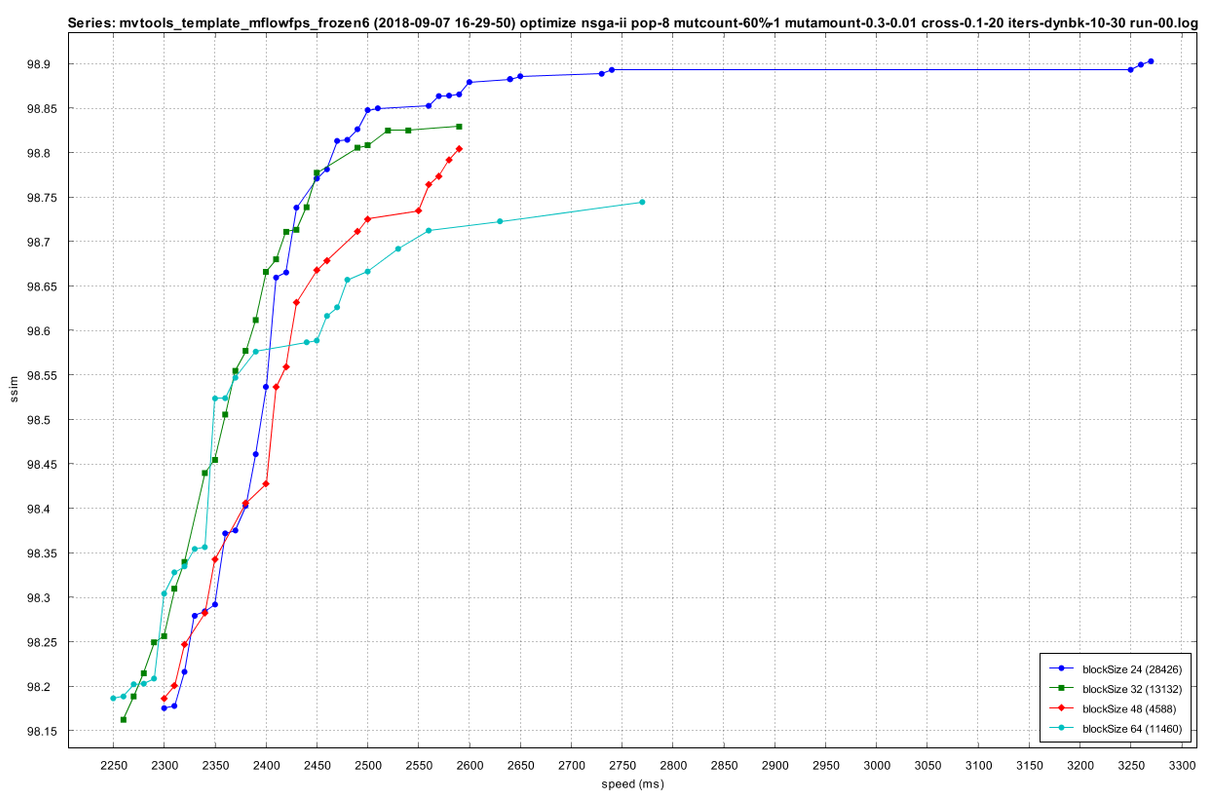

The visualization has a few other bells and whistles but one I'd like to highlight here is the group by functionality: you can group the results by a certain parameter's values and show a separate pareto front for each value. For example this is what grouping by MVTools' blocksize looks like:

Another visualization mode draws a heat map where two parameters are chosen for the x and y axis, the color of the cell at x,y is the best result found with that parameter combination, brighter color meaning better result.
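The data behind such a heat map is a simple aggregation over the log. Here is a minimal sketch, assuming the log has already been parsed into a list of dicts; the function name, the parameter names and the values are invented for the example.

```python
def best_per_cell(log, x_param, y_param, result_key, maximize=True):
    """Map each (x, y) parameter combination to the best result seen."""
    pick = max if maximize else min
    cells = {}
    for entry in log:
        key = (entry[x_param], entry[y_param])
        value = entry[result_key]
        cells[key] = pick(cells[key], value) if key in cells else value
    return cells

# Made-up log entries; a third parameter varies within the same cell.
log_entries = [
    {"blksize": 8,  "overlap": 2, "pel": 1, "ssim": 0.91},
    {"blksize": 8,  "overlap": 2, "pel": 2, "ssim": 0.94},
    {"blksize": 8,  "overlap": 4, "pel": 2, "ssim": 0.93},
    {"blksize": 16, "overlap": 4, "pel": 2, "ssim": 0.89},
]

cells = best_per_cell(log_entries, "blksize", "overlap", "ssim")
print(cells)
```

Each cell's value would then be mapped to a color, with the best values drawn brightest.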

Measuring the script's runtime is not very accurate, i.e. it has some variation. All the other processes running at the same time are using CPU cycles and messing with the cache, so you should try to minimize other activity on the computer. In order to get more accurate results you can run a validation on the finished results log file. In validation the idea is to run the pareto front results multiple times and calculate the average, median, minimum or maximum of these multiple measurements (you can decide which one(s)).
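To show why repeating the measurement helps, here is a small sketch using Python's standard `statistics` module on made-up runtimes for one parameter set, where one run was disturbed by background activity. The function name and the numbers are only for illustration.

```python
import statistics

def summarize(times_ms):
    """Aggregate repeated runtime measurements of the same settings."""
    return {
        "average": statistics.mean(times_ms),
        "median": statistics.median(times_ms),
        "minimum": min(times_ms),
        "maximum": max(times_ms),
    }

# Five made-up runs of the same script; the 340 ms run is an outlier.
runs = [212.0, 198.0, 205.0, 340.0, 200.0]
summary = summarize(runs)
print(summary)
```

The single slow run pulls the average up noticeably, while the median and minimum stay close to the typical runtime, which is one reason to pick the aggregate deliberately.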

So how good is the optimizer? Let's take a look at one example. A while back there was a thread about best motion interpolation filters. There's a test video with a girl waving her hand. The best options that I know of are John Meyer's jm_fps script and FrameRateConverter. Here's a comparison gif with those two and Zopti. FrameRateConverter was run with preset="slowest".

Now obviously I'm showing a bit of a cherry-picked example here. The optimizer was instructed to search for the best parameters for this short 10 frame sequence. I have run most of my optimization runs using only 10 frames because otherwise the optimization takes too long. Ideally the optimizer would automatically select the frames from a longer video. I have started working on such a feature but it's not finished yet (got sidetracked to implement a scene change detector...) so for now the user has to make the selection.

At this point I envision that Zopti can be a useful tool for plugin authors so they can test plugin parameters and try to search for the optimal ones. Zopti can also be a bug hunter: it has found several bugs in the Avisynth MVTools by Pinterf, which he has since fixed. The VapourSynth MVTools is currently not yet as robust, but jackoneill has already fixed one bug (thanks!). At some later point Zopti could be useful for normal users, when combined with a script with a limited search space so that the search will not take an excessively long time. Zopti (or rather the previous version AvisynthOptimizer) has already been used by some courageous people from these forums for scaling, motion compensation, denoising and HDR to SDR tone-mapping. I'd like to thank them all for providing important feedback, feature requests and bug reports.

You can download the latest Zopti version here. I will keep this link updated.

Version history:

1.2.3 (full details here)

- new mode: convert

-converts Zopti log file into other formats for easier importing into other software such as Excel or Google Docs

-supports sorting of the rows by result or parameter value using the option -sort, for example "-sort GMSD" sorts by GMSD

-output formats: CSV and zopti

-example: zopti -mode convert -format csv -sort dct time (sorts the latest log file by parameter dct and result time and writes a new file in csv format)

-example: zopti -mode convert -log "./path/abc run-01.log" (converts file "./path/abc run-01.log" into a new csv file)

- improvements to visualization mode: line

-now supports option -types which controls which aggregate lines are displayed in the chart

-valid values are "best", "worst", "average", "median", "count" and "samples"

- display of option -groupby improved, pareto fronts can no longer be cut off the screen

- option -continue also supported with mutation algorithm

- validation mode behavior change: it now validates all the results in the log file and not just the pareto front

1.2.2

- supports a new way for an Avisynth script to write the result file

-first line is the number of frames (and also the number of actual result lines to be written), use for example WriteFileStart(resultFile, "FrameCount()")

-then come the per frame result lines in any order, using the same format as before

-do not write the last line which starts 'stop', it's no longer necessary and might cause trouble if used with the new way

-this also means that calculating the sum of similarity metric or time is not needed, Zopti calculates those instead

-old method still works as well, Zopti differentiates between them by looking at the first line (if it's a positive integer -> new way)

-new way is safer as the last 'stop' line is no longer needed (it has to be the last line if used and that is hard to guarantee especially when the script is executed multithreaded)

- bugfix: when using -threads > 1 it was possible for two threads to use the same result file which may have resulted in reporting invalid result for one thread or the same result for both threads

- bugfix: -vismode line always drew the minimum result per parameter value even when the goal was to maximize the result value

1.2.1

- new option: retry

-tries to run the script again this many times if execution fails

-can be useful if the script uses plugins which are not 100% reliable and can crash

-default value is zero (do not retry)

-example: zopti script.avs -pop 24 -iters 10000 -retry 4

- new visualization mode: line

-draws a line chart of the best found result per all tested values of certain optimized parameter (given with option -param)

-accepts parameter -range to limit the displayed values to certain range

-black line shows the best values, blue line indicates the number of results per value

-a red dot is displayed at the best found value (multiple dots possible if there are multiple values with the same best result)

-example: zopti -mode evaluate -vismode line -param lambda -range 1000 5000 (displays the best result of lambda values between 1000 and 5000)

- a shutdown hook will terminate all the running subprocesses if Zopti is aborted (for example with CTRL + C)

- mode -validate also tests that the first result value (usually quality) is the same as before, gives a warning message if they differ

- reading and parsing the log file with -autorefresh true is now MUCH faster by reading the file backwards and only adding the new results (previously up to several seconds, now < 1 ms)

- scripts ending .py also correctly detected as VapourSynth scripts (previously only .vpy was detected)

- support for custom output properties written by the script (only in VapourSynth scripts for now)

- better support for displaying results from output files with different parameters (scripts can have different parameters and still be compared in the same chart)

- option -continue now also supported by exhaustive algorithm (when using more than one thread)

- order of runs in a visualization is now based on file name, not by modified date

- all chart types now support the window size in option -shot (example: zopti -mode evaluate -shot 1200x800)

- visualization mode seriespareto no longer contains the global pareto line

- VapourSynth script output files will be interpreted even when VapourSynth reports failed execution (sometimes file still has complete data)

- bugfix: using -alg mutation and threads < population size would stall progress after initial generation due to parameter queue being too small

- bugfix: fixed a memory leak in XChart (at least partially)

Pre-1.2.1 history removed due to character count limitation.

The next post will be a hands-on denoising tutorial. Stay tuned.