Welcome to Doom9's Forum, THE in-place to be for everyone interested in DVD conversion. Before you start posting please read the forum rules. By posting to this forum you agree to abide by the rules.

#21

Lost my old account :(

Join Date: Jul 2017

Posts: 324

#22

Registered User

Join Date: May 2009

Posts: 331

I did something similar to The Stilt when he was wrong, and just called him a random haha. Thanks for posting everything in one place! It will make things far easier to compare once we get some runs from Ryzen 2!

#23

Registered Developer

Join Date: Mar 2010

Location: Hamburg/Germany

Posts: 10,346

Since we like to be accurate:

It's either Zen 2 or Ryzen 3000. "Ryzen 2" is misleading and not a term used by AMD: Ryzen 3/5/7/9 are model classes in their lineup, so it could easily be mistaken for a lower-end model, and with the next generation there would be even more real confusion.

__________________

LAV Filters - open source ffmpeg based media splitter and decoders

Last edited by nevcairiel; 27th June 2019 at 10:58.

#24

Registered User

Join Date: Jan 2019

Posts: 9

1. An old version of x265 [2.2+15-a18ab7656c30].
2. An old version of FFmpeg [N-82889-g54931fd].
3. The five test sources, which are all 8-bit, all 4:2:0, all 16:9 1080p.

As for the benchmark itself:

1. As one can't test a given CPU in vacuo, one actually tests an ensemble of CPU+MB+RAM+IO. In other words, one adds degrees of freedom to the system, and together these propagate uncertainty.
2. As one doesn't test x265 on its own, due to the way the benchmark is designed, one actually tests the FFmpeg+x265 pair. Another degree of freedom, another potential path for the propagation of uncertainty.
3. As the benchmark uses an old version of x265, all the results obtained are "watermarked" by the common reference frame of an x265 2.2 encoder. One can't extrapolate the results to an x265 3.1 encoder and assume they hold true by default.
4. As the benchmark tests x265's default parameter configuration (i.e. --preset medium), all the results obtained are "watermarked" by the common reference frame of that particular preset. One can't extrapolate the results to another preset (or another parameter configuration) and assume they hold true by default.
5. The benchmark doesn't test a single low-complexity source, 10-bit source, non-4:2:0 source, or non-FHD source. All the results are again "watermarked" by a highly specific encoding scenario. One can't extrapolate them to another encoding scenario and assume they hold true by default.

Do you understand what the actual result of the benchmark you are providing is? It enables one to say: I am encoding this particular source of this particular complexity (at this particular resolution, bit depth and chroma subsampling), within this particular software environment (OS, x265, FFmpeg), on this particular machine (CPU, MB, RAM, IO).

It certainly does not enable one to say: CPU MMM achieves an average of abc% better FPS than CPU NNN across all possible/conceivable encoding scenarios. At this point, I'd simply reiterate my initial statement: I would advise a healthy dose of skepticism whenever you see results without extensive coverage of the testing methodology.
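As a rough illustration of the "degrees of freedom" argument above, here is a Python sketch that enumerates a small, hypothetical test matrix built only from the dimensions named in the post (x265 version, preset, bit depth, chroma subsampling, resolution, source complexity) and counts how many cells one fixed configuration covers. The specific values in `DIMENSIONS` and the contents of `BENCHMARK_CELL` are illustrative assumptions, not the definition of any real benchmark.

```python
from itertools import product

# Hypothetical test matrix: the dimensions are the ones named in the post,
# but the particular values chosen here are illustrative assumptions.
DIMENSIONS = {
    "x265_version": ["2.2", "3.1"],
    "preset": ["medium", "slow"],
    "bit_depth": [8, 10],
    "chroma": ["4:2:0", "4:2:2", "4:4:4"],
    "resolution": ["1920x1080", "3840x2160"],
    "complexity": ["low", "high"],
}

# The single configuration a fixed benchmark like the one discussed exercises
# (assumption: its sources are treated here as "high" complexity).
BENCHMARK_CELL = {
    "x265_version": "2.2",
    "preset": "medium",
    "bit_depth": 8,
    "chroma": "4:2:0",
    "resolution": "1920x1080",
    "complexity": "high",
}


def scenarios(dims):
    """Yield every combination of dimension values as a dict."""
    keys = list(dims)
    for values in product(*(dims[k] for k in keys)):
        yield dict(zip(keys, values))


all_scenarios = list(scenarios(DIMENSIONS))
covered = [s for s in all_scenarios if s == BENCHMARK_CELL]
print(f"{len(all_scenarios)} scenarios, {len(covered)} covered by the fixed benchmark")
```

With only two or three values per dimension the matrix already has 96 cells, and the fixed benchmark exercises exactly one of them; adding hardware (CPU, MB, RAM, IO) or OS dimensions would multiply the count further.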

#25

RipBot264 author

Join Date: May 2006

Location: Poland

Posts: 7,812

BTW, your requirements for a "proper" benchmark are just insane and totally unrealistic! I advise you to stop using any software, because there are countless variables affecting the performance of your machine (including phases of the moon).

__________________

Windows 7 Image Updater - SkyLake\KabyLake\CoffeLake\Ryzen Threadripper

Last edited by Atak_Snajpera; 27th June 2019 at 16:13.

#26

Registered User

Join Date: Jan 2019

Posts: 9

You're being deliberately obtuse. I suppose I can attempt a simplistic analogy by referring to a system with only two degrees of freedom.

I want to investigate what happens to water when I heat it up. To be rigorous, that's so-called "pure water", known today as UPW (ultrapure water). At a pressure of 1 atm, I observe that water is gaseous at 400 K. Conducting a second experiment, I observe that water is gaseous at 500 K too. At this point I ask myself: can I consider the previous results a good predictor of what would happen to water heated to 600 K instead? Can I simply assume that it would be gaseous, and skip actually performing the test? The answer is no: a proper phase diagram shows what happens to water heated to 600 K and highlights the critical importance of the other parameter of this simplistic system, pressure.

To return to the discussion of benchmarking encoding times, we're now looking at a system with dozens of degrees of freedom: the parametric space of the hardware, of the software, of the source to be encoded, and of the encoding process itself. This entire thread was started by explicitly mentioning UHD/4K encoding scenarios; it's right in front of your eyes, in both the title and the leading post. You replied (here) by implicitly assuming that the results of FHD benchmarking scenarios (highly specific FHD encoding scenarios, as already pointed out) will simply hold true even when one particular parameter varies drastically, claiming that increasing the resolution fourfold doesn't alter the expected result. I replied that an implicit assumption is not sound, as there is an explicit need for additional testing to account for the variable parameter(s).

#27

Registered User

Join Date: Aug 2010

Location: Athens, Greece

Posts: 2,901

Could you propose an existing benchmark that meets your requirements for a proper x265 encoding benchmark procedure on multithreaded CPUs? Or do you have a suggestion for how to build one?

__________________

Win 10 x64 (19042.572) - Core i5-2400 - Radeon RX 470 (20.10.1)
HEVC decoding benchmarks
H.264 DXVA Benchmarks for all

#28

RipBot264 author

Join Date: May 2006

Location: Poland

Posts: 7,812

__________________

Windows 7 Image Updater - SkyLake\KabyLake\CoffeLake\Ryzen Threadripper

#29

Registered User

Join Date: May 2009

Posts: 331

Now hopefully someone will leak something other than stupid Geekbench results. I seriously want to see how well this generation does in encoding. I don't care about games... I want encoding benchmarks. Sadly, I think we are going to have to wait until one of the forum members gets one, as we know review sites don't exactly know how to benchmark anything other than games.

#30

Moderator

Join Date: Jan 2006

Location: Portland, OR

Posts: 4,770

Changing resolution can also really impact threading: more pixels mean that fewer frame threads are needed on smaller core counts, reducing overhead. And of course, how well does encoding scale across multiple cores? Across the same or different NUMA nodes?
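To make the resolution/threading point a little more concrete, here is a back-of-the-envelope Python sketch of how many CTU rows a single frame can encode at once under HEVC wavefront parallel processing (WPP), where each CTU row has to trail the row above it by two CTUs. It assumes 64x64 CTUs (x265's default CTU size) and equal time per CTU, and it ignores everything else the encoder does (lookahead, frame threads, motion-search dependencies), so treat it as a rough upper bound on intra-frame row parallelism, not as a model of x265's actual scheduler. The function name `wpp_max_rows` is hypothetical.

```python
import math


def wpp_max_rows(width, height, ctu=64):
    """Upper bound on CTU rows in flight at once in one frame under WPP,
    assuming every CTU takes the same time and each row starts two CTUs
    behind the row above it (hypothetical helper, not an x265 API)."""
    cols = math.ceil(width / ctu)
    rows = math.ceil(height / ctu)
    # At step t, row r works on column t - 2*r, so at most ceil(cols / 2)
    # rows can be active simultaneously, and never more rows than exist.
    return min(rows, math.ceil(cols / 2))


for w, h in [(1920, 1080), (3840, 2160)]:
    print(f"{w}x{h}: up to {wpp_max_rows(w, h)} CTU rows in parallel per frame")
```

Under these assumptions a 1080p frame offers at most about 15 concurrent rows and a 2160p frame about 30, so at UHD a single frame can keep roughly twice as many threads busy, which is consistent with needing fewer frame threads on a given core count.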

#31

Registered User

Join Date: Jan 2007

Posts: 729

https://www.ptt.cc/bbs/PC_Shopping/M...742.A.61C.html

This has (unconfirmed) results for the x265 benchmark used on HWBot. I think it uses an older binary, so gains from 256-bit AVX2 might be a bit subdued, but the same would be true for the Intel chip. The benchmark measures and reports the maximum single-thread turbo clock at initialization, so I think the screenshot shows scores for a stock Ryzen 5 3600 and Core i7-8700K. Basically, this looks nice for Ryzen 3000, and if it is confirmed I would wait for it rather than get anything else.

#32

Registered User

Join Date: Feb 2002

Location: San Jose, California

Posts: 4,407

Very nice results; I hope they are true! It looks like they probably are, given more leaks from other areas.

__________________

madVR options explained

#36

Registered User

Join Date: Dec 2002

Posts: 5,565

3.6 GHz is only the advertised base clock. The i9-9900K boosts up to 5 GHz dynamically. If it's not limited to the default 95 W setting, it can sustain turbo clocks longer.

https://www.anandtech.com/show/13591...-power-for-sff

#37

Moderator

Join Date: Jan 2006

Location: Portland, OR

Posts: 4,770

#39

Registered User

Join Date: Mar 2004

Posts: 1,125


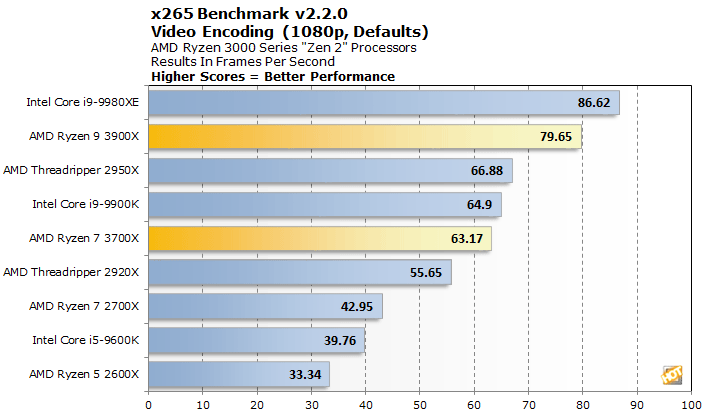

x265 and x264 benchmarks of the new Ryzen 3000 series chips:

HandBrake v1.2.2: (chart not preserved)

StaxRip x264 and x265 benchmarks: https://nl.hardware.info/reviews/939...4-x265-en-flac

Last edited by hajj_3; 7th July 2019 at 15:02.
